What Is a Load Balancer?

In the context of application auto scaling, a load balancer is used to distribute incoming traffic to multiple instances of an application. As the traffic to the application increases, more instances of the application are automatically spawned to handle the additional load, and the load balancer automatically directs traffic to these new instances. Conversely, as traffic decreases, instances that are no longer needed are terminated, and the load balancer stops directing traffic to them. This allows the system to automatically scale up or down based on network traffic.

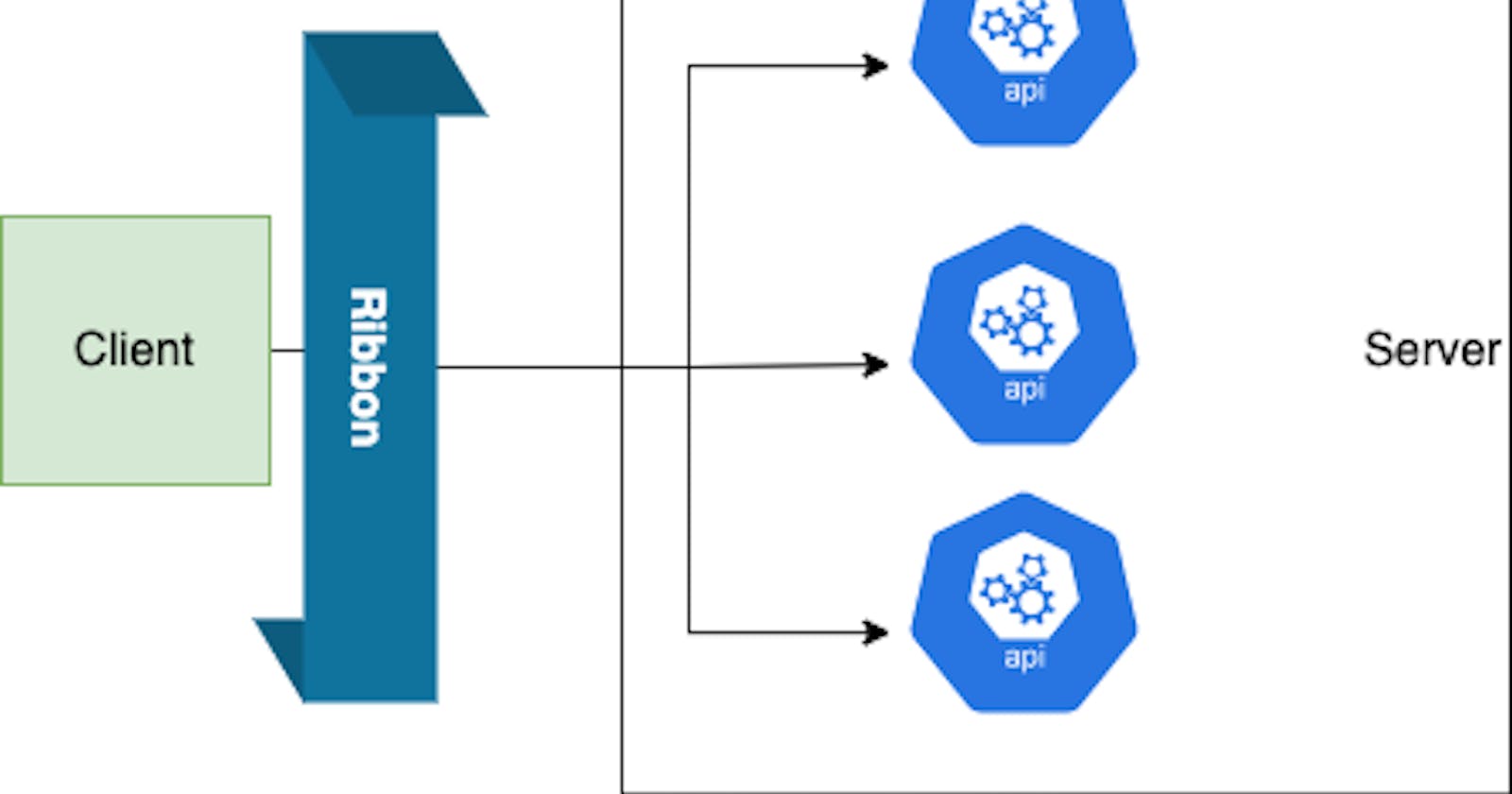
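To make the scaling behaviour above concrete, here is a minimal sketch of a pool that a load balancer might keep in memory. The class name `InstancePool`, its `register`/`deregister`/`route` methods, and the addresses are all hypothetical and chosen for illustration; a real load balancer would also track health and connection state.

```python
class InstancePool:
    """Hypothetical in-memory pool of application instances behind a load balancer."""

    def __init__(self):
        self._instances = []   # addresses currently receiving traffic
        self._next = 0         # position of the next instance to use

    def register(self, address):
        """Called when the auto scaler launches a new instance."""
        if address not in self._instances:
            self._instances.append(address)

    def deregister(self, address):
        """Called when the auto scaler terminates an instance."""
        if address in self._instances:
            self._instances.remove(address)

    def route(self):
        """Return the instance that should receive the next request."""
        if not self._instances:
            raise RuntimeError("no instances available")
        self._next %= len(self._instances)   # wrap around the current pool size
        address = self._instances[self._next]
        self._next += 1
        return address


pool = InstancePool()
pool.register("10.0.0.1:8080")
pool.register("10.0.0.2:8080")
first_two = [pool.route() for _ in range(2)]

pool.register("10.0.0.3:8080")      # traffic rose, a new instance joins
after_scale_up = {pool.route() for _ in range(3)}

pool.deregister("10.0.0.1:8080")    # traffic fell, an instance leaves
after_scale_down = {pool.route() for _ in range(4)}
```

Once an instance is registered it starts receiving requests on the next pass through the pool, and once it is deregistered it no longer appears in the rotation.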

Client-Side Load Balancing

Client-side load balancing is a technique where the client is responsible for distributing its requests across different servers. The client typically uses a load-balancing algorithm such as round-robin, least connections, or IP hash to select the server for each request. This approach is often combined with client-side service discovery, where the client looks up the list of available servers in a service registry.

This approach can be more efficient because each client can make decisions based on the traffic it actually observes, but it is more complex to implement and manage. The client must know about all the available servers, including their IPs and ports, and it must also implement the load-balancing algorithm itself.
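As a rough illustration of what the client has to carry, the sketch below keeps a fixed server list and implements two of the algorithms mentioned above, round-robin and a hash-based choice. The class `ClientSideBalancer`, its method names, and the addresses are assumptions made for this example, not part of any particular library.

```python
import hashlib
import itertools


class ClientSideBalancer:
    """Hypothetical client-side balancer holding a fixed list of servers."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        self._servers = list(servers)
        self._cycle = itertools.cycle(self._servers)

    def round_robin(self):
        """Pick servers in order, wrapping around at the end of the list."""
        return next(self._cycle)

    def by_hash(self, key):
        """Map the same key (for example a client IP) to the same server."""
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self._servers)
        return self._servers[index]


servers = ["10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"]
balancer = ClientSideBalancer(servers)

picks = [balancer.round_robin() for _ in range(4)]   # fourth pick wraps to the first server
sticky_a = balancer.by_hash("203.0.113.7")
sticky_b = balancer.by_hash("203.0.113.7")           # same key, same server
```

Note that the server list is hard-coded here. In practice the client would need some way to refresh it as servers come and go, which is a large part of the extra complexity.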

Server-Side Load Balancing

Server-side load balancing is a technique where the server side, rather than the client, is responsible for distributing incoming requests across different servers. This is typically done by a dedicated load balancer, either a hardware device or software, that sits between the clients and the servers.

In server-side load balancing, the load balancer sits in front of the servers and acts as a single point of contact for the clients. All incoming traffic is directed to the load balancer, which then uses a load balancing algorithm to decide which server to forward the request to. The load balancer can use various factors such as server load, response time, and server health to make this decision.
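The decision step described above can be sketched as a small function. This example assumes a least-connections policy restricted to healthy servers; the `Backend` dataclass, the `pick_backend` function, and the sample values are hypothetical, and a real load balancer would obtain health and connection counts from its own checks rather than from hard-coded data.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    address: str
    healthy: bool = True          # result of the most recent health check
    active_connections: int = 0   # requests currently open on this server


def pick_backend(backends):
    """Return the healthy backend with the fewest active connections."""
    candidates = [b for b in backends if b.healthy]
    if not candidates:
        raise RuntimeError("no healthy backends")
    return min(candidates, key=lambda b: b.active_connections)


backends = [
    Backend("10.0.0.1:8080", active_connections=12),
    Backend("10.0.0.2:8080", active_connections=3),
    Backend("10.0.0.3:8080", healthy=False, active_connections=0),
]

chosen = pick_backend(backends)
chosen.active_connections += 1   # the forwarded request now counts as open
```

The third server has the fewest connections but is skipped because it is marked unhealthy, so the request goes to the least-loaded healthy server instead.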

This approach is simpler to implement and manage because all traffic goes through a central point, but the load balancer itself can become a single point of failure if it is not deployed redundantly.

What Are the Popular Load Balancers?

Some popular load balancers that can be used to help with application auto-scaling include:

Amazon Elastic Load Balancing (ELB): This is a load balancer service provided by Amazon Web Services (AWS) that automatically scales to handle varying levels of traffic.

Azure Load Balancer: This is a load balancer service provided by Microsoft Azure that can automatically distribute incoming traffic across multiple instances of an application.

HAProxy: This is a widely used open-source load balancer and reverse proxy. It does not scale an application by itself, but its list of backend servers can be updated as an auto-scaling system adds or removes instances.

NGINX: This is a popular open-source web server and reverse proxy that can also be used as a load balancer. It does not launch or terminate instances itself, but its list of upstream servers can be updated as instances are scaled up and down.

Google Cloud Load Balancing: This is a load balancer service provided by Google Cloud Platform that automatically scales to handle varying levels of traffic.

Summary

A load balancer is an essential component in building scalable and highly available applications. It distributes incoming traffic across multiple servers, which lets an application scale automatically as traffic increases or decreases. Load balancers also ensure high availability: because traffic is spread across multiple instances of an application, if one instance fails, traffic is automatically directed to the remaining instances. They improve performance by distributing traffic evenly across all available instances, preventing any one instance from becoming overwhelmed. Additionally, load balancers provide other features such as SSL termination, content-based routing, and health checking, which can help to improve the security and reliability of an application.